Medical ethicists have long been troubled that racial disparities make their way into healthcare decisions, with worse outcomes for racial minorities and other at-risk classes.

For example:

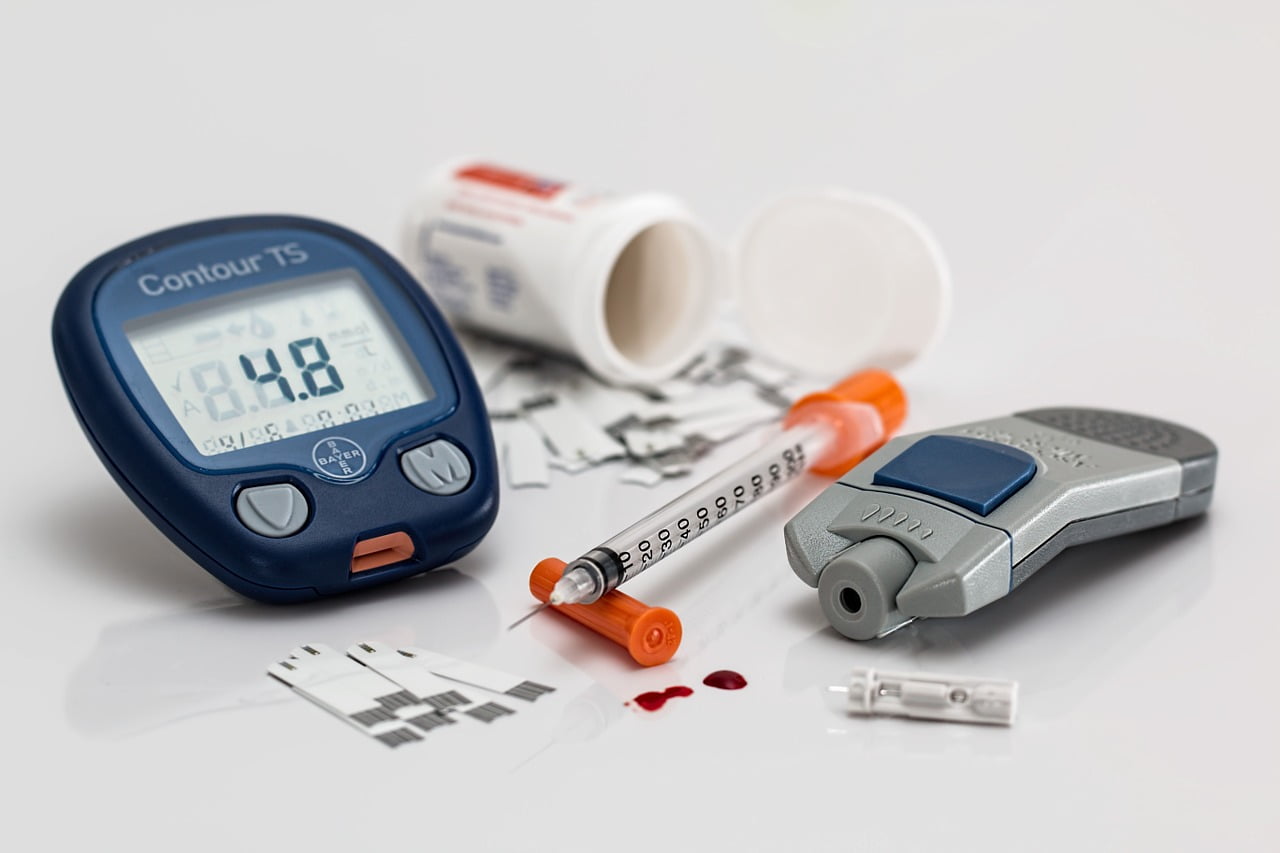

- Black patients in emergency rooms were 40% less likely to be prescribed pain management medication than white patients.

- Hispanic patients in emergency rooms were 25% less likely to be prescribed pain management medication than white patients.

- Obese patients are at risk of misdiagnosis if a provider attributes a condition to the co-existing condition of obesity, when a more serious underlying condition may be present.

A panel of experts on medical uses of artificial intelligence (AI) convened at the beginning of December as guests of the Radiological Society of North America. The topic of the day: using AI to combat bias in healthcare and inequity in patient outcomes.

Despite the many positive uses of AI, this panel was not a victory lap; rather, the business was serious. The title of the panel: Artificial Intelligence and Implications for Health Equity: Will AI Improve Equity or Increase Disparities?

The title suggests the conflict that may occur in using AI in a healthcare context. How could AI increase disparities? It almost makes it sound like we’re expecting machines to be racist.

And yet, trouble is brewing on the horizon. Early adopters of healthcare AI face legal and ethical liabilities when the algorithms, confoundingly enough, reflect or even magnify troubling inequities.

Take the algorithm deployed by UnitedHealth to predict whether a patient would require extra medical care. Imagine their shock when the New York Departments of Financial Services (DFS) and Health (DOH) sent stern letters inquiring after evidence of racial bias.

It turned out that white patients were slated for extra care far more often than Black patients, even when all other factors were comparable. In addition to the moral quandary, UnitedHealth’s AI was putting the company at risk of stiff fines and penalties under the state’s anti-discrimination laws, even though UnitedHealth had never programmed the algorithm to recognize race!

What was going on? Could a machine be “racist”? Race wasn’t even a factor in the algorithm’s decision-making. The answer, as you might expect, lay not in some inherent bias of the machine but in flaws in the criteria its human programmers had trained it to look for.

For example, the algorithm was taught to treat past healthcare spending and consumption as an indicator of future health needs. Black communities have historically had less access to healthcare and have been less able to afford it. The algorithm was penalizing them for that lack, failing to recommend extra treatment even when they were equally likely to need it.
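The proxy problem can be illustrated with a small synthetic sketch. Everything in it is an assumption made for illustration: the `Patient` fields, the group labels "A" and "B", the dollar figures, and the cutoff of six patients are invented, and this is not UnitedHealth's actual algorithm. Both groups have identical need, but group B is assumed to spend less per unit of need, so ranking by past spending selects fewer group B patients than ranking by need would.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    group: str            # hypothetical label; the scoring rule never reads it
    need: int             # true health need, e.g. number of chronic conditions
    past_spending: float  # dollars spent on care in the prior year


def make_patients():
    """Build a synthetic population where both groups have identical need,
    but group B spends less per unit of need (an assumed access gap)."""
    patients = []
    for need in range(1, 11):  # need levels 1..10, one patient per group
        patients.append(Patient("A", need, 1000.0 * need))
        patients.append(Patient("B", need, 550.0 * need))  # assumed lower spending
    return patients


def select_top(patients, key, k):
    """Return the k patients ranked highest by the given scoring key."""
    return sorted(patients, key=key, reverse=True)[:k]


def share_of_group(selected, group):
    """Fraction of the selected patients who belong to `group`."""
    return sum(p.group == group for p in selected) / len(selected)


patients = make_patients()

# Rule 1: rank by past spending (the proxy the article describes).
by_spending = select_top(patients, key=lambda p: p.past_spending, k=6)

# Rule 2: rank by true need (what the program actually cares about).
by_need = select_top(patients, key=lambda p: p.need, k=6)

print("Group B share, ranked by spending:", share_of_group(by_spending, "B"))
print("Group B share, ranked by need:    ", share_of_group(by_need, "B"))
```

In this toy population, group B fills half the slots when patients are ranked by need but only one of six when they are ranked by spending, even though the scoring rule never looks at the group label.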

Artificial intelligence calls to mind killer robots who can think, but according to Healthcare Weekly, AI is just computer code designed to follow a logical process based on inputs and parameters. Machine learning is a subset of AI that gets better and better at making decisions the more input it receives—i.e. it learns from its past work.

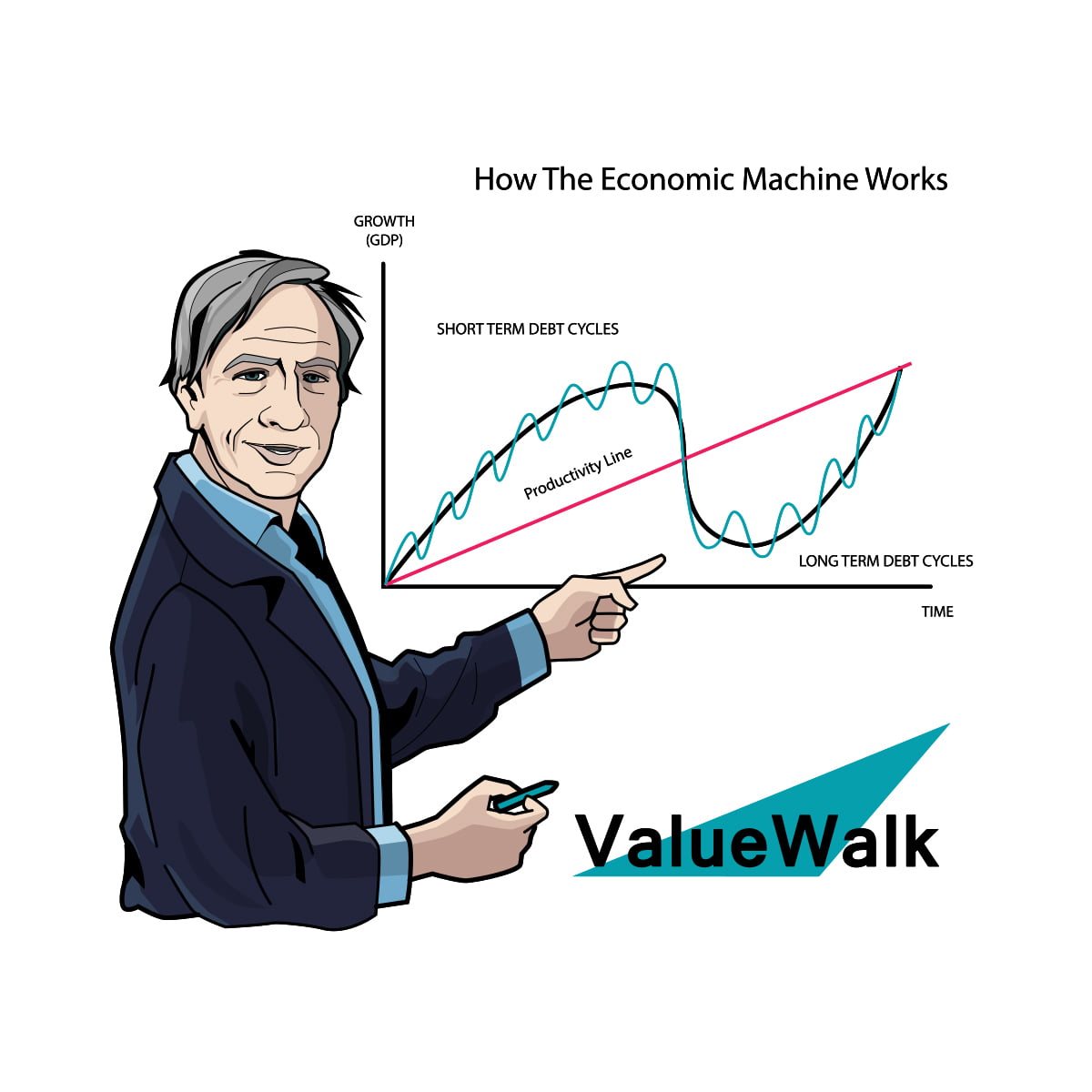

According to Value Walk, the goal is for the algorithms to make “decisions” based on evidence that once required a human brain to analyze and assess. In fact, theoretically, AI can make these decisions better than any single human, because AI can flawlessly process vast amounts of data in seconds, more than one human could process in a lifetime.

AI algorithms have made a huge impact on the healthcare industry. During the COVID-19 pandemic, AI was critical in the fastest-ever major vaccine development drive, as well as for patient triage and prediction of severe lung infections.

Of course, these machines don’t think the way people do, so they are incapable of harboring racial prejudice. But humans set the parameters. In defining what AI algorithms ought to look for, humans can encode their own biases, or systemic biases, into the very machines designed to be perfectly impartial.

The panelists addressed these topics but also took an optimistic view of the potential for AI to alleviate bias in healthcare, rather than exacerbate it.

Constance Lehman of Harvard Medical School, for example, presented data on a new algorithm being used in breast cancer risk assessment. Developed in partnership with Regina Barzilay, the DL (deep learning) app is used to predict a woman’s risk for breast cancer based on mammogram imagery alone. Not only did it produce more accurate predictions, but it also fared better in terms of equity across racial lines than other predictive solutions for breast cancer.

The panelists emphasized the need to recognize healthcare disparities perpetuated by AI as opportunities. After all, it’s hard to change the mind of a committed racist, but it’s comparatively easy to edit computer code. Self-perpetuating biases in AI output could be treated like “bugs in the system” to be adjusted until the algorithms produce fairer and more accurate outcomes across all groups.
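Treating a bias as a bug implies being able to measure it. The following is a minimal sketch of one possible check, not a method the panelists described: it compares referral rates across groups of equally sick patients. The record format, the group labels, the function names, and the audit data are all hypothetical.

```python
from collections import defaultdict


def referral_rates(records):
    """Map each group to the fraction of its patients referred for extra care.

    `records` is an iterable of (group, referred) pairs; the format and the
    group labels are hypothetical.
    """
    referred_count = defaultdict(int)
    total_count = defaultdict(int)
    for group, referred in records:
        total_count[group] += 1
        if referred:
            referred_count[group] += 1
    return {g: referred_count[g] / total_count[g] for g in total_count}


def disparity_ratio(rates):
    """Lowest referral rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was referred in any group, so there is no gap
    return min(rates.values()) / highest


# Made-up audit data: 8 equally sick patients per group.
records = (
    [("A", True)] * 4 + [("A", False)] * 4
    + [("B", True)] * 1 + [("B", False)] * 7
)

rates = referral_rates(records)
ratio = disparity_ratio(rates)
print(rates, ratio)
```

A check like this could be rerun after every change to the model, with the team choosing how far below 1.0 the ratio may fall before the result counts as a defect to fix.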

Case in point: once UnitedHealth corrected its AI, the percentage of Black patients that the algorithm recommended for extra treatment shot up from 17.7% to 46.5%.